On October 7, 2021, Google announced a change to its policy on climate change misinformation. Starting in November, any content, including YouTube videos, that ‘contradicts well-established scientific consensus around the existence and causes of climate change’ would no longer be able to earn revenue from Google ads.

However, the policy’s effectiveness in tackling the spread of climate disinformation will depend on both its interpretation (what does and does not fall within the scope of climate change denial) and its implementation: if a given video breaches Google Ads’ new guidelines, how likely is it to be flagged as such and demonetized?

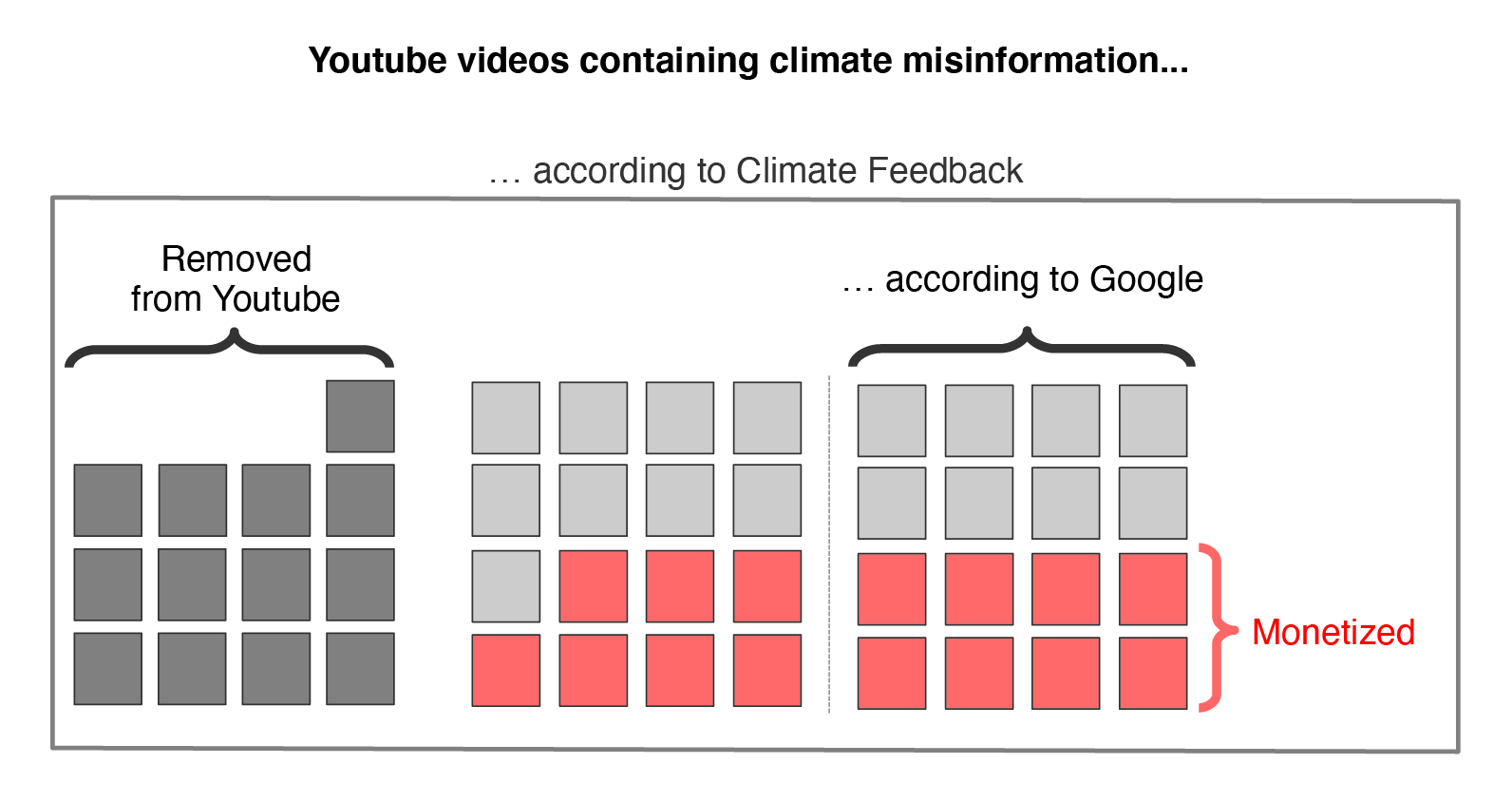

We ran a quick experiment to check whether YouTube applies its demonetization policy to content containing climate change misinformation. Our findings show that the policy is not systematically applied.

Data & Method

To conduct this experiment, we used the archive of videos verified by Climate Feedback that is accessible via the Open Feedback platform. Climate Feedback is a website that publishes reviews of the credibility of viral content claiming to be based on science, notably by crowdsourcing those reviews from domain experts: academic researchers who work on the relevant climate topics.

This archive contained 49 distinct YouTube videos that were shown to contain climate change misinformation. Examples range from the claim that the 2020 Australian wildfires had nothing to do with climate change to a video that purported to “destroy” climate science “in 8 minutes.”

This list is of limited size, so we do not attempt to draw general statistical conclusions from it. However, the sample is large enough for a preliminary check of whether Google Ads’ new policy is achieving its objective of demonetizing climate change misinformation videos.

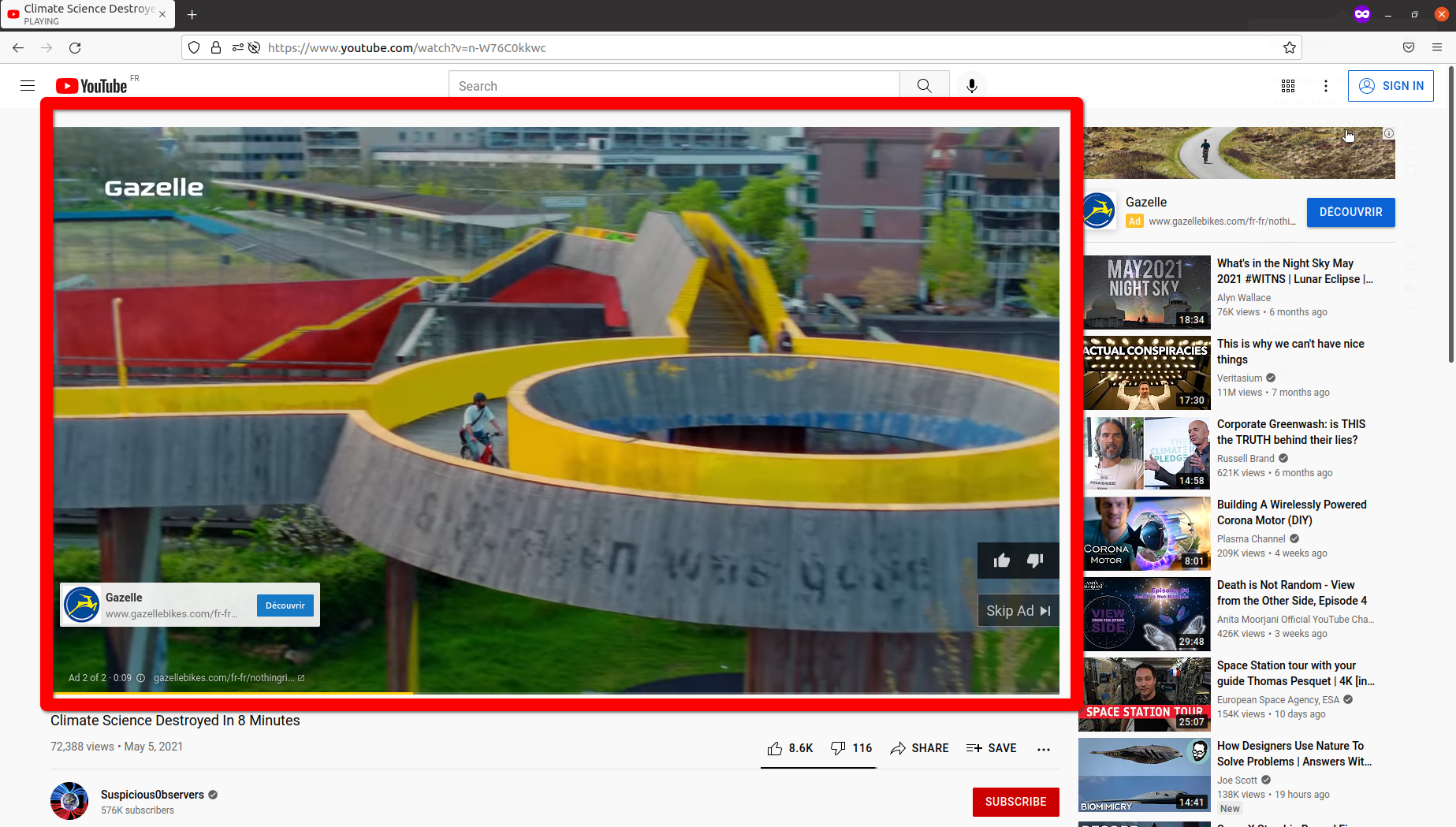

At the video level, YouTube has three types of ads, highlighted in red in the screen captures below.

1- Video ads that play before or during the actual YouTube video:

Screenshot of an ad playing before a video claiming to “destroy climate science.” Ironically, the ad is for Gazelle, a brand of electric bicycles.

2- Banners displayed on the video while it is playing:

Screenshot of an ad displayed while the video runs; the ad is for a brand of solar panels.

3- Ads in the top-right corner of the page, similar to Google Ads on any other website:

Screenshot of an ad displayed in the upper-right corner. Here, the ad is for the Financial Times.

In addition to these ad placement options, YouTube offers other marginal monetization mechanisms, such as the integration of the content publisher’s merchandise in the video presentation box or a donation widget.

We visited the 49 URLs containing climate disinformation and recorded whether each one was displaying any advertisement. Because YouTube’s ad display algorithm has an element of randomness, we reduced the risk of missing ads by visiting each URL three times and marking the video as displaying ads if we observed an ad at least once.
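The counting rule described above can be sketched in a few lines. This is a hypothetical illustration of the rule, not code used in the study: the `tally` function, the video IDs and the per-visit booleans are all made up. A video counts as displaying ads if an ad was seen on any of its three visits, and videos that have been taken down are left out of the sample.

```python
from typing import Optional

# Hypothetical record of observations: for each video, one boolean per visit
# (True if any ad was seen on that visit), or None if the video had been
# taken down. The IDs below are placeholders, not videos from the study.
Observations = dict[str, Optional[list[bool]]]


def tally(observations: Observations) -> tuple[int, int]:
    """Return (videos still online, videos showing ads on at least one visit)."""
    # Drop taken-down videos from the sample.
    online = {vid: visits for vid, visits in observations.items() if visits is not None}
    # A single sighting across the visits is enough to count the video as monetized.
    with_ads = sum(1 for visits in online.values() if any(visits))
    return len(online), with_ads


example: Observations = {
    "video_a": [False, True, False],   # ad seen on the second visit only -> counts
    "video_b": [False, False, False],  # no ad on any of the three visits
    "video_c": None,                   # taken down -> excluded from the sample
}
print(tally(example))  # (2, 1)
```

Taking "at least once" across repeated visits trades a few possible false negatives for certainty that every counted video really did show an ad at some point.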

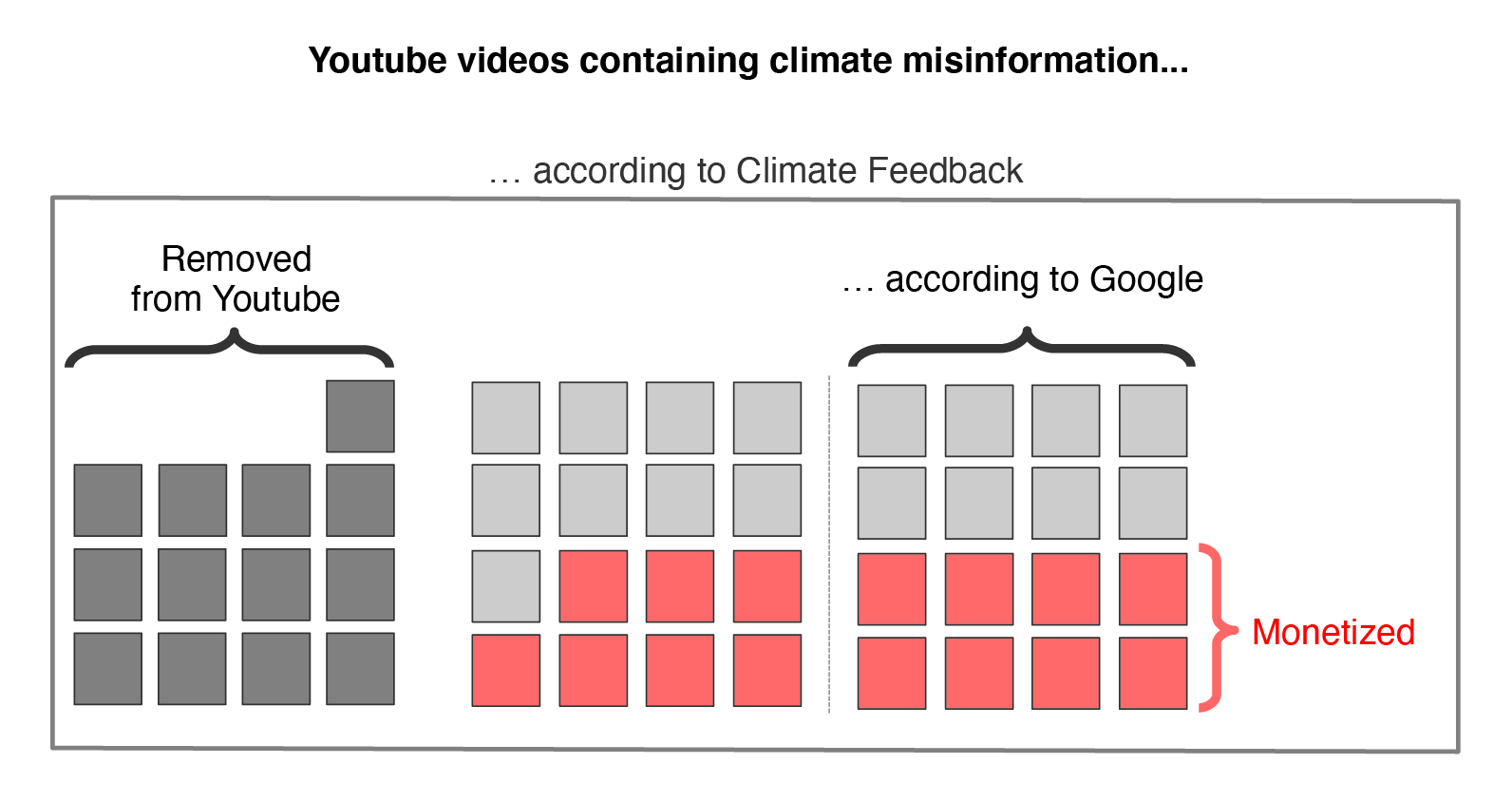

Out of the 49 initial videos, we found that 13 had been taken down since the review was published. These videos were removed from our sample.

Out of the remaining 36 videos, we found that 17 (47%) displayed at least one ad. In addition, a further 2 videos had no ads but had direct integration with their merchandise store, which constitutes another monetization mechanism.

Results & Discussion

Our short investigation reveals that roughly half of the videos that contain misinformation according to Climate Feedback are still monetized on YouTube: 17 of the 36 displayed ads, and 2 more were linked to a merchandise store.

There are several possible explanations for this apparent lack of implementation of Google’s new policy.

The first is that YouTube and Climate Feedback define climate disinformation differently.

Google’s new policy offers some insight into the definition it uses. The demonetization policy covers “content referring to climate change as a hoax or a scam, claims denying that long-term trends show the global climate is warming, and claims denying that greenhouse gas emissions or human activity contribute to climate change.”

Putting aside possible disagreements with the scope of this policy, we decided to investigate whether, as it stood, it was properly implemented. A Climate Feedback editor therefore took another look at each video in our sample and labeled it as ‘in breach’ or ‘not in breach’ using Google’s own definition, so as to remove any ‘differing definitions’ effect.

Of the 36 videos that Climate Feedback had characterized as containing misinformation, 20 were found to also fall under Google’s demonetization policy. Of those 20, 8 displayed ads and another 2 had their e-commerce store integrated into YouTube. So about half (10 of 20) of the videos that should be demonetized are not.
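The shares reported in this article can be reproduced from the counts given in the text. `share` is just a hypothetical helper; the counts are the ones reported above.

```python
def share(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole


# Videos still online (36) that displayed at least one ad (17).
print(f"{share(17, 36):.0f}%")     # 47%
# Videos in breach of Google's own definition (20) that were still monetized:
# 8 with ads plus 2 with merchandise-store integration.
print(f"{share(8 + 2, 20):.0f}%")  # 50%
# The same group counting ads only, without merchandise integration.
print(f"{share(8, 20):.0f}%")      # 40%
```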

Another possible explanation for the lack of enforcement is that YouTube does not plan to enforce its policy on content published before the policy was announced. It could also be that YouTube is not aware of these videos. We will reach out to the company with these results and update this article if and when we hear back.

Conclusion

While Google Ads’ new policy on demonetizing content denying climate change is certainly a step towards a more trustworthy platform, it can only work if it is implemented, a task that requires considerable investment given the sheer number of videos hosted on the platform.

Another investigation into websites promoting climate change misinformation found that a significant number of them still displayed Google ads, which is consistent with our observations.

It should be noted that YouTube’s definition is so narrow that the most skilled producers of disinformation could easily evade it. One doesn’t need to explicitly say “climate change is a hoax” to confuse the public and undermine their understanding of the reality of climate change and the challenges it poses to societies and ecosystems.

Finally, a policy of demonetizing content video by video is unlikely to be very effective in curbing misinformation on the platform. Given that the actors promoting misinformation tend to remain the same over time, a more effective policy would be to demonetize all the videos from any YouTube channel that repeatedly publishes misinformation, for instance, channels that have published three or more misinformation items over the past 12 months.
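The channel-level rule suggested above could look something like the sketch below. It is hypothetical: the function name, the (channel, date) input format and the approximation of “12 months” as 365 days are assumptions, and it presupposes that individual videos have already been reviewed and flagged as misinformation, as Climate Feedback does.

```python
from collections import Counter
from datetime import date, timedelta

# Assumed parameters taken from the example in the text: three or more
# misinformation items over the past 12 months (approximated as 365 days).
THRESHOLD = 3
WINDOW = timedelta(days=365)


def channels_to_demonetize(flagged_items: list[tuple[str, date]], today: date) -> set[str]:
    """Return channels with at least THRESHOLD flagged items inside the window.

    flagged_items holds hypothetical (channel_id, publication_date) pairs for
    videos that reviewers have already judged to contain misinformation.
    """
    # Count only flagged items published within the trailing window.
    recent = Counter(
        channel
        for channel, published in flagged_items
        if today - WINDOW <= published <= today
    )
    return {channel for channel, count in recent.items() if count >= THRESHOLD}


items = [
    ("channel_x", date(2021, 3, 1)),
    ("channel_x", date(2021, 6, 15)),
    ("channel_x", date(2021, 10, 1)),
    ("channel_y", date(2021, 9, 1)),
    ("channel_y", date(2019, 1, 1)),  # outside the 12-month window
]
print(channels_to_demonetize(items, today=date(2021, 11, 1)))  # {'channel_x'}
```

Because the window slides, a channel stops qualifying once its older flagged items age out, which keeps the rule focused on repeat offenders rather than on a single past mistake.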